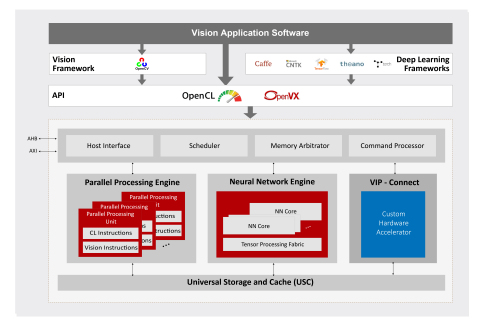

Vivante VIP8000’s VIP-Connect™ interface lets customers rapidly integrate application-specific hardware acceleration units that work alongside the standard Vivante VIP8000 hardware units.

The processor is programmed with OpenCL or OpenVX, using a unified programming model across all hardware units, including customer application-specific hardware acceleration units. All the hardware units work concurrently and share data in cache, which significantly reduces bandwidth.

To better address embedded products across different market segments, Vivante VIP8000 is highly configurable: the Parallel Processing Unit, Neural Network Unit and Universal Storage Unit can each be scaled independently. The ACUITY™ SDK provides training and a complete set of IDE tools.

“Neural Network technology is growing and evolving rapidly, and Vivante VIP8000’s use cases are expanding beyond the original surveillance camera and automotive customer base. Vivante VIP8000’s superior PPA (Performance, Power, Area), the innovations in bandwidth reduction through patent-pending universal cache architecture, and compression algorithms speed up the movement to enable embedded devices to be AI terminals collaborating with cloud to deliver revolutionary AI experiences to the end user,” said Wei-Jin Dai, Executive Vice President, Chief Strategy Officer, VeriSilicon.

“To enable rapid growth in AI in embedded devices, highly-efficient and powerful programmable engines with industry standard APIs like OpenCL and OpenVX are critical. The efficiency will come from both neural network innovation and increased computing density,” said Jon Peddie, President, Jon Peddie Research.

For more information, please visit www.verisilicon.com.